Boosting Efficiency and Performance for Automotive, Networking, and Cloud Computing

Bernhard Friebe, Senior Director of FPGA Software Solutions Marketing, Intel Corporation, and James Reinders, HPC Enthusiast

There couldn’t be a better time to examine field programmable gate arrays (FPGAs) (Figure 1). A new era in computing is emerging thanks to the new programmability of FPGAs. With the onslaught of data in the world, there’s an enormous need for computing that combines the power efficiency of custom silicon designs with the flexibility of FPGAs.

In this article, we share our thoughts on what it takes to truly enable FPGAs for software developers across all fields—including three that we explicitly discuss:

- Automotive

- Networking (e.g., 5G)

- End-to-end cloud computing (e.g., the data center)

Figure 1. Intel® Stratix® 10 FPGA

We dive a little deeper into enabling the data center by discussing the acceleration stack and the Open Programmable Acceleration Engine (OPAE). We don’t dive into any particular domain, such as machine learning, which a complete solutions stack enables through encapsulation of FPGA IP (think library routines implemented to use an FPGA). However, we do provide some links to explain more about the new world of accessible FPGA programming that Intel is leading.

What’s an FPGA?

An FPGA is essentially a blank slate waiting for us to draw a circuit design onto it. We do this by writing a description for the digital circuit we want, compiling it (FPGA developers call this synthesizing) into a configuration file (called a bitfile) and loading it into the FPGA. Once loaded, an FPGA behaves like the digital circuit we designed.

Most FPGAs, including the SRAM-based devices discussed here, are not permanently programmed: they lose their program (the bitfile, also called a bitstream) when powered off. In most systems, the FPGA is loaded at power-up, either from firmware on the board with the FPGA or programmatically by the host processor. The FPGA can be reloaded any time we want to change it.
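To make the "blank slate" idea concrete, here is a toy C model of one lookup table (LUT), the small configurable element that FPGA logic is commonly built from. This is a deliberate simplification. A real bitfile configures very many logic elements plus routing, memory, and DSP blocks, and the names `lut4`, `lut4_load`, and `lut4_eval` are hypothetical. The point is that the "program" is just configuration bits, and that loading different bits turns the same hardware into a different circuit.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical toy model of a single 4-input lookup table (LUT).
 * Its 16 configuration bits form the truth table, a tiny stand-in for a bitfile. */
typedef struct {
    uint16_t config; /* bit i holds the output for input pattern i */
    int loaded;      /* cleared at power-on: the configuration is volatile */
} lut4;

/* "Power on": nothing is configured yet. */
void lut4_power_on(lut4 *lut) {
    lut->config = 0;
    lut->loaded = 0;
}

/* Load (or reload) a configuration; this can happen at any time. */
void lut4_load(lut4 *lut, uint16_t bits) {
    lut->config = bits;
    lut->loaded = 1;
}

/* Evaluate the configured circuit for inputs a..d (each 0 or 1). */
int lut4_eval(const lut4 *lut, int a, int b, int c, int d) {
    assert(lut->loaded);
    unsigned pattern = (unsigned)(a | (b << 1) | (c << 2) | (d << 3));
    return (int)((lut->config >> pattern) & 1u);
}

/* Truth tables for two example circuits. */
#define LUT4_AND4 0x8000u /* output 1 only for pattern 15 (all inputs 1) */
#define LUT4_XOR4 0x6996u /* parity of the four inputs */
```

Loading `LUT4_AND4` and later `LUT4_XOR4` into the same `lut4` changes its behavior without altering any "wiring", which mirrors how reloading a bitfile repurposes the device.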

The highly parallel architecture of FPGAs means that, for workloads that map well to it, running computations on an FPGA can deliver better performance, lower power, lower latency, and higher throughput than running them in software on a general-purpose processor. Imagine being able to configure a hardware realization of a function and use that in our program. As we’ll discuss, the hottest topic in the FPGA world is making FPGA programming more accessible to software developers, and Intel is leading the way to making this happen.

The Data Explosion

Three examples where Intel® FPGAs are key to delivering faster speeds, ultra-low latency, power efficiency, and enormous flexibility are autonomous and assisted driving, accelerated networking (including 5G and software-defined networking, or SDN), and cloud computing (including high-performance deep neural network, or DNN, inference). In all three usages, big data is part of why the parallel processing architecture of FPGAs is so critical.

Automated Vehicles

Automated vehicles, including those offering autonomous driving, are possible because of a combination of computation in the car, the networking, and the data center. Automated vehicles generate and use huge amounts of data to navigate safely. It takes about 1GB/second of real-time processing, while the time from sensing to reacting must be less than one second for safety reasons. For example, Intel processing power is part of the 2018 Audi A8* Autonomous Driving System. In that design, Intel FPGAs handle the object fusion, map fusion, parking, pre-crash, processing, and functional safety aspects of the self-driving car.

Networking and 5G

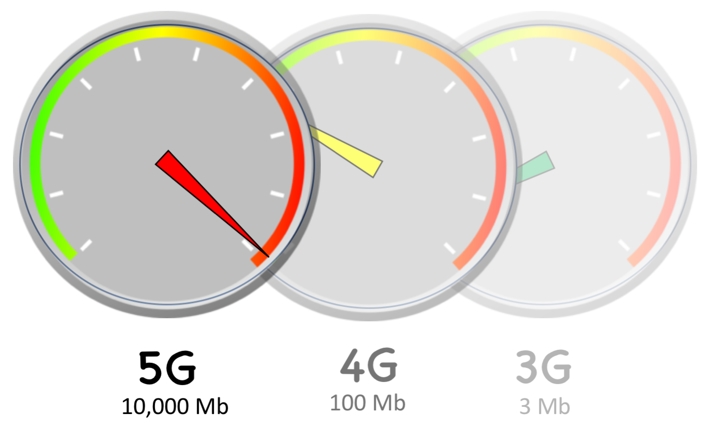

Networks are expanding rapidly, with 1000X increases in bandwidth, 100X increases in devices, and requirements for 1ms end-to-end round trips. FPGAs are critical in meeting these demands. With devices supporting 100 Gb/second just gaining adoption, IEEE 802.3’s 400 Gb/s Ethernet Study Group (started in 2013) is expected to produce a 400 Gb/second Ethernet standard this year. With efforts well underway to standardize 5G, we are nearing a point where data carried on wireless networks will exceed that carried on purely wire-based networks (Figure 2).

Figure 2. The move to 5G

Cloud Computing

IDC has said that the total data in the world was about 4.4 zettabytes in 2013 and 8.6 zettabytes in 2015, and will grow to 44 zettabytes by 2020, a year in which as many as 50 billion devices are predicted to be connected to the Internet. Cloud computing places demands on the data center, on the edge, and everywhere in between: it needs scale, throughput, performance-per-watt efficiency, flexibility, and low latency. Again, FPGAs are critical in meeting these demands.

The Computing Imperative: More Parallelism, Less Power, Greater Flexibility

To make sense of this flood of data, we need to automate decision-making, have real-time insights into what connected devices are telling us, and present interactive and intuitive user connections to this data. Without accelerated computing, scale-out of many applications (e.g., artificial intelligence) will prove impractical. New computing requirements demand more parallelism, lower power consumption, and a degree of flexibility never before seen in accelerators. To meet this need, hardware platforms—from the edge to the cloud—have been evolving to include mixtures of CPUs and accelerators. FPGAs play a critical role in the trend toward heterogeneous computing platforms that are highly parallel, power-efficient, and reprogrammable. In short, FPGAs enable hardware performance with the programmability of software. However, the FPGA programming model has typically been hardware-centric. As FPGAs become a standard component of the computing environment, with users expecting the hardware to be software-defined, they must be accessible not just by hardware developers, but by software developers.

FPGA Programming for a Software World

FPGAs have been used for years to solve hardware design problems. Until recently, they were programmed exclusively with tools and languages familiar to hardware designers rather than with programming languages designed for software development. New FPGA designs aimed at supporting software development, rather than only replacing hardware designs, coupled with new software development tools, make FPGA programming worth a serious look by software developers.

This shift reminds us of the development of GPU programming methods, but with the advantage that FPGAs are not predesigned with a particular use in mind (i.e., graphics processing). This flexibility ushers in a new era in computing like none before it.

Completing the Solution Stack for FPGAs

Pioneers in using FPGAs as programmable accelerators have come a long way with very few tools to help them. They have kept a foot in the world of hardware design, programming in a hardware description language (HDL) such as Verilog* or VHDL*. A few years ago, we surveyed people interested in FPGA development. A telling comment we heard was “There’s a community of FPGA developers out there; the [various FPGA] forums are both pretty active; and there’s a lot of literature available. That being said, it’s a community of mostly hardware engineers—and a software developer might have trouble having their questions answered.” While this is changing, the comment is still very important because fewer than one in 10 people surveyed felt comfortable programming in VHDL or Verilog despite expressing an interest in FPGAs. In the same group, over 90 percent of software developers knew C, C++, or OpenCL*, and over 75 percent said they would prefer to depend on libraries to exploit FPGA functionality. Therefore, it makes sense that Intel’s next generation of FPGA tools focuses on C, C++, OpenCL, and libraries.

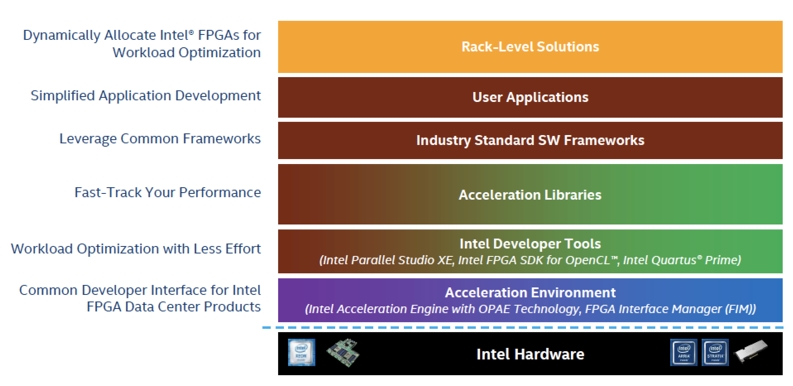

The software stack continues to rely on hardware flows as always, but the complete solutions stack (Figure 3) adds programming layers that provide efficient, software-friendly interfaces. These new methods don’t abandon traditional FPGA tools, which remain available and have improvements of their own. With a complete solutions stack for FPGAs, however, programming mirrors what we find in the CPU world: deep architectural expertise goes into compilers and libraries, which are accessible to programmers whose expertise is in other areas (e.g., science and engineering disciplines other than computer architecture). There is a separation of concerns between application developers, who use FPGAs via FPGA IP, and the developers who create that FPGA IP. A complete solutions stack makes this new way of thinking about FPGA programming possible.

Figure 3. Complete solutions stack empowers both software and hardware developers

Acceleration Stack for Intel® Xeon® Processors with FPGAs

Intel is investing in FPGA design, programming tools, and libraries to bring about this complete solutions stack for FPGAs. We will discuss how this is happening for cloud computing and particularly within the data center.

The acceleration stack for Intel® Xeon® processors with FPGAs (Figure 4) is a robust collection of software, firmware, and tools designed and distributed by Intel to make it easier to develop and deploy Intel FPGAs for workload optimization in the data center. It provides optimized and simplified hardware interfaces and software APIs so that software developers can focus on the unique value-add of their own solutions. Unprecedented code reuse is now possible with our common developer interface for Intel FPGAs. For even faster time to market, system-optimized reference libraries are provided for some domains, making it possible for application developers with little to no prior FPGA experience to use Intel FPGAs to supercharge performance.

Figure 4. Abstracting and managing FPGAs

An Elegant Solution for using FPGAs in a Software World

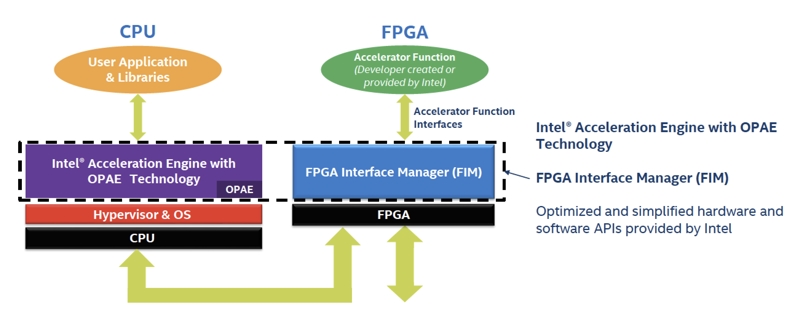

Intel aims to make FPGAs accessible through ordinary library calls. Early FPGA users successfully pursued similar approaches, but without portable standards. Consider a standard framework (Figure 5) that gives:

- Application developers a standard way (such as an SDK) to request the functionality they need, which the framework then supplies in FPGA-accelerated form. These developers continue to program in C, C++, Fortran, or another high-level language with a C interface (e.g., Python*). This lets them tap into the broader community of open source and commercial tools developers.

- System owners a standard way to access and manage the FPGAs they deploy. Data center operators, for instance, can use the Open Programmable Acceleration Engine (OPAE) in their acceleration stack to manage FPGAs in a data center environment.

- FPGA code developers a standard way to package and share accelerated routines. These developers will use OpenCL or some other method (e.g., an HDL/RTL such as VHDL or Verilog) to precisely tune and export their FPGA routines.

System owners use the framework to allow applications to load/unload the FPGA packages they need. Application developers use the framework to request and use FPGA packages, while still doing all programming in a high-level language. FPGA programmers can use low-level languages to create FPGA modules and package them for use by application developers.
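The division of labor among the three roles can be sketched in C. This is a hypothetical illustration, not Intel’s framework API. Every name here (`package_publish`, `package_set_loaded`, `app_call`) is invented, and the "FPGA" routine is an ordinary C function standing in for an accelerated package. We also assume, for illustration, that the application supplies a CPU fallback for when the system owner has not loaded the package.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical sketch of the three roles; none of these names are a real Intel API. */
typedef void (*kernel_fn)(const float *in, float *out, size_t n);

typedef struct {
    const char *name; /* published by the FPGA code developer */
    kernel_fn accel;  /* stand-in for the FPGA-accelerated routine */
    int loaded;       /* controlled by the system owner */
} fpga_package;

#define MAX_PACKAGES 8
static fpga_package registry[MAX_PACKAGES];
static size_t registry_count = 0;

/* FPGA code developer: package and publish a routine. */
int package_publish(const char *name, kernel_fn accel) {
    if (registry_count == MAX_PACKAGES) return -1;
    registry[registry_count].name = name;
    registry[registry_count].accel = accel;
    registry[registry_count].loaded = 0;
    registry_count++;
    return 0;
}

static fpga_package *find(const char *name) {
    for (size_t i = 0; i < registry_count; i++)
        if (strcmp(registry[i].name, name) == 0) return &registry[i];
    return NULL;
}

/* System owner: decide which packages are loaded onto the FPGA. */
int package_set_loaded(const char *name, int loaded) {
    fpga_package *p = find(name);
    if (!p) return -1;
    p->loaded = loaded;
    return 0;
}

/* Application developer: request functionality by name from plain C.
 * Returns 1 if the "FPGA" package ran, 0 if the CPU fallback ran. */
int app_call(const char *name, kernel_fn cpu_fallback,
             const float *in, float *out, size_t n) {
    assert(cpu_fallback != NULL);
    fpga_package *p = find(name);
    if (p && p->loaded) { p->accel(in, out, n); return 1; }
    cpu_fallback(in, out, n);
    return 0;
}

/* Example routine: both versions double each element. */
void scale2_cpu(const float *in, float *out, size_t n) {
    for (size_t i = 0; i < n; i++) out[i] = 2.0f * in[i];
}

void scale2_fpga_stub(const float *in, float *out, size_t n) {
    for (size_t i = 0; i < n; i++) out[i] = in[i] + in[i]; /* would run on the FPGA */
}
```

The application code is the same call to `app_call` whether or not the package is loaded. That is the property the framework aims for: application developers never touch the FPGA directly.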

Open Programmable Acceleration Engine (OPAE)

To help realize this acceleration stack in data centers, Intel helped create the Open Programmable Acceleration Engine (OPAE) (Figure 6). OPAE runs on the processor and handles all the details of the FPGA reconfiguration process. The OPAE also provides libraries, drivers, and sample programs that can be used to develop routines for the FPGA. It offers consistency across product generations by abstracting hardware-specific FPGA resource details. It’s designed for minimal software overhead and latency and offers a lightweight user-space library (libfpga). OPAE supports both virtual machines and bare-metal platforms.

The OPAE C API allows software systems to interface with FPGAs. The API provides device- or platform-specific extensions to model specific features of target architectures. For example, Intel has created a platform-specific API extension to expose a low-latency notification mechanism over the coherent memory interconnect of the Intel Xeon processor with Integrated FPGA, which is included as part of the Intel FPGA IP library.
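OPAE’s C API follows a discover-then-open pattern. Software enumerates the FPGA resources that match a set of properties, gets back tokens, and opens a token to obtain a handle. In OPAE itself these steps use calls such as `fpgaEnumerate`, `fpgaOpen`, and `fpgaClose`. The sketch below only mimics that pattern with a hypothetical in-memory resource table, so it runs without FPGA hardware or OPAE installed. Its types and functions are illustrative and are not OPAE’s, and real code should follow the OPAE API reference.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical mock of a discover -> open -> use -> close flow (not OPAE).
 * Tokens identify resources; handles grant access (exclusive in this mock). */
typedef enum { OBJ_DEVICE, OBJ_ACCELERATOR } obj_type;

typedef struct {
    obj_type type;
    const char *afu_id; /* identifies the accelerator function */
    int opened;
} mock_resource;

static mock_resource table[] = {
    { OBJ_DEVICE,      "fim",        0 },
    { OBJ_ACCELERATOR, "afu-crc32",  0 },
    { OBJ_ACCELERATOR, "afu-sha256", 0 },
};
#define TABLE_LEN (sizeof table / sizeof table[0])

typedef size_t mock_token;          /* index into the resource table */
typedef mock_resource *mock_handle;

/* Enumerate resources matching a type and, optionally, an AFU id.
 * Returns the total number of matches, even if more than max_tokens. */
size_t mock_enumerate(obj_type type, const char *afu_id,
                      mock_token *tokens, size_t max_tokens) {
    size_t matches = 0;
    for (size_t i = 0; i < TABLE_LEN; i++) {
        if (table[i].type != type) continue;
        if (afu_id && strcmp(table[i].afu_id, afu_id) != 0) continue;
        if (matches < max_tokens) tokens[matches] = i;
        matches++;
    }
    return matches;
}

/* Open a resource; returns NULL if it is already open. */
mock_handle mock_open(mock_token t) {
    assert(t < TABLE_LEN);
    if (table[t].opened) return NULL;
    table[t].opened = 1;
    return &table[t];
}

/* Release the resource so others can open it. */
void mock_close(mock_handle h) {
    assert(h && h->opened);
    h->opened = 0;
}
```

Filtering on properties before opening lets the same application code find "the accelerator that implements function X" on different machines. This is the consistency across product generations that the abstraction is meant to provide.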

Figure 5. Intel® Xeon® processor acceleration stack for FPGAs

Figure 6. Acceleration environment

A key capability of Intel FPGAs is partial reconfiguration: a portion of an FPGA can be reconfigured while the rest of the design continues to function. This lets us reconfigure regions of the FPGA at runtime to implement different functionality as needed. OPAE takes advantage of partial reconfiguration by using distinct bitstreams (FPGA lingo for compiled FPGA programs). The FPGA interface manager (FIM, nicknamed the blue bitstream) contains the logic that supports FPGA accelerators, including the PCIe* IP core, the CCI-P* fabric, the onboard memory interface, and the management engine. The accelerator functional units (AFUs, nicknamed green bitstreams) are the compiled versions of our custom functionality. An AFU is an accelerated computational routine, implemented in FPGA logic, that OPAE offloads to an Intel FPGA to improve performance. OPAE supports multiple slots for AFUs on the same FPGA, so it is reasonable to think of AFUs as libraries of FPGA-accelerated functions that applications can load and use, with multiple libraries (AFUs) active at the same time.
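The blue/green split can be modeled in a few lines of C. This is a hypothetical sketch, not an OPAE call sequence. The FIM is fixed, each slot holds one AFU, and reconfiguring one slot leaves the state of the other slots alone. As a labeled modeling assumption, each green bitstream records which FIM it was built against, and the model refuses a mismatched one. All names (`pr_device`, `pr_reconfigure_slot`, `pr_run`) are invented.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of partial reconfiguration (not an OPAE API).
 * The blue bitstream (FIM) stays loaded; each slot holds one green bitstream (AFU). */
#define NUM_SLOTS 2

typedef struct {
    const char *afu_name; /* what the green bitstream implements */
    unsigned fim_id;      /* FIM it was compiled against (modeling assumption) */
} green_bitstream;

typedef struct {
    const char *afu_name;    /* NULL when the slot is empty */
    unsigned long work_done; /* state that survives reconfiguring other slots */
} afu_slot;

typedef struct {
    unsigned fim_id; /* identifies the loaded blue bitstream */
    afu_slot slots[NUM_SLOTS];
} pr_device;

void pr_init(pr_device *d, unsigned fim_id) {
    d->fim_id = fim_id;
    for (size_t i = 0; i < NUM_SLOTS; i++) {
        d->slots[i].afu_name = NULL;
        d->slots[i].work_done = 0;
    }
}

/* Reconfigure one slot; the other slots keep running untouched.
 * Returns 0 on success, -1 if the bitstream targets a different FIM. */
int pr_reconfigure_slot(pr_device *d, size_t slot, const green_bitstream *gbs) {
    assert(slot < NUM_SLOTS);
    if (gbs->fim_id != d->fim_id) return -1;
    d->slots[slot].afu_name = gbs->afu_name;
    d->slots[slot].work_done = 0; /* only this region is reset */
    return 0;
}

/* Simulate the AFU in one slot processing some items. */
void pr_run(pr_device *d, size_t slot, unsigned long items) {
    assert(slot < NUM_SLOTS && d->slots[slot].afu_name != NULL);
    d->slots[slot].work_done += items;
}
```

For example, after loading a compression AFU into slot 0 and running it, loading an encryption AFU into slot 1 leaves slot 0’s accumulated work intact. This is the behavior that makes it practical to treat AFUs as independently loadable libraries.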

Intel also supports an AFU simulation environment (ASE), a code development and simulation tool suite available in the Intel® QuickAssist Accelerator Abstraction Layer Software Development Kit. It allows testing of OPAE-enabled software applications against AFU simulations. It aims to provide a consistent transaction-level hardware interface and software API that allows users to develop and debug production-quality AFU and host applications that can then be run on the real FPGA system without modifications.
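The "run unmodified on real hardware" goal rests on host code depending only on an interface, so that a simulated AFU and a real one are interchangeable behind it. The C sketch below shows that design idea with a hypothetical register-level interface (`accel_backend`) and a simulated AFU that doubles whatever is written to register 0. It does not use ASE’s actual interfaces. A hardware backend would implement the same two functions with real MMIO through the driver stack, and it is omitted here because it needs a device.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical interface: host code talks only to these function pointers,
 * so the same code can run against a simulated or a real backend. */
typedef struct {
    const char *name;
    void (*write_reg)(void *ctx, size_t offset, uint64_t value);
    uint64_t (*read_reg)(void *ctx, size_t offset);
    void *ctx;
} accel_backend;

/* Simulated AFU: a small register file in host memory.
 * Writing register 0 makes register 1 hold twice that value. */
typedef struct { uint64_t regs[4]; } sim_afu;

static void sim_write(void *ctx, size_t offset, uint64_t value) {
    sim_afu *s = ctx;
    assert(offset < 4);
    s->regs[offset] = value;
    if (offset == 0) s->regs[1] = value * 2; /* the simulated "hardware" behavior */
}

static uint64_t sim_read(void *ctx, size_t offset) {
    sim_afu *s = ctx;
    assert(offset < 4);
    return s->regs[offset];
}

accel_backend make_sim_backend(sim_afu *s) {
    accel_backend b = { "simulation", sim_write, sim_read, s };
    return b;
}

/* Host application logic: written once, independent of the backend. */
uint64_t host_double(const accel_backend *b, uint64_t x) {
    b->write_reg(b->ctx, 0, x);
    return b->read_reg(b->ctx, 1);
}
```

Debugging `host_double` against the simulated backend exercises exactly the code path that would later drive the hardware backend.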

FPGA IP Libraries: Acceleration Libraries

We mentioned earlier that there is plenty of opportunity for FPGA experts to continue using RTL/HDL to write highly optimized code. What’s new for FPGAs is the ability to package such expertise in a standard way, essentially as a library, for use by application developers who are not FPGA experts. We refer to such libraries as FPGA IP libraries, and the opportunities for writing them seem endless. FPGA IP libraries could help applications in diverse fields such as machine learning, genomics, data analytics, networking, autonomous driving, beamforming, or anything else that benefits from moving work into FPGA hardware for enormous parallelism, low latency, flexibility, and power efficiency. Not surprisingly, Intel has taken the initiative to write an FPGA IP library that provides the basic, but important, functionality needed to use FPGAs. This library helps bootstrap the rest of us and serves as an example of an FPGA IP library and of how easily one integrates into the complete solutions stack.

Intel FPGA IP Library

The Intel® FPGA IP Library is a lightweight user-space library that provides abstraction for Intel FPGA IP resources in a compute environment. Built on top of the driver stack that supports an Intel FPGA IP device, the library abstracts away hardware- and operating system (OS)-specific details and exposes the underlying Intel FPGA IP resources as a set of features accessible from within software programs running on the host.

These features include the acceleration logic preconfigured on the device, as well as functions to manage and reconfigure the device. The library enables user applications to transparently and seamlessly leverage Intel FPGA IP-based acceleration.

By providing a unified C API, the Intel FPGA IP library supports different kinds of integration and deployment models, ranging from single-node systems with one or more Intel FPGA IP devices to large-scale deployments in a data center. A simple use-case, for example, is for an application running on a system with an Intel FPGA IP PCIe device to easily use the Intel FPGA IP to accelerate certain algorithms. At the other end of the spectrum, resource management and orchestration services in a data center can use this API to discover and select Intel FPGA IP resources and then divide them up to be used by workloads with acceleration needs.
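At the data center end of that spectrum, an orchestration service discovers FPGA resources and divides them among workloads. The sketch below is a hypothetical C illustration of that step, not the Intel FPGA IP library API. We assume each discovered device exposes some number of free accelerator slots and place each workload with a simple first-fit policy. First fit is just one easy-to-read choice. A production orchestrator would likely also weigh locality, the AFU each workload needs, and priorities.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical orchestration sketch (not the Intel FPGA IP library API):
 * discovered devices and the workloads asking for accelerator slots. */
typedef struct {
    int device_id;
    int free_slots; /* accelerator regions not yet assigned */
} fpga_resource;

typedef struct {
    int workload_id;
    int slots_needed;
    int assigned_device; /* -1 if the request could not be satisfied */
} accel_request;

/* First-fit placement: each request goes to the first device with room.
 * Returns the number of requests that were satisfied. */
size_t assign_accelerators(fpga_resource *devs, size_t ndevs,
                           accel_request *reqs, size_t nreqs) {
    size_t satisfied = 0;
    for (size_t r = 0; r < nreqs; r++) {
        assert(reqs[r].slots_needed > 0);
        reqs[r].assigned_device = -1;
        for (size_t d = 0; d < ndevs; d++) {
            if (devs[d].free_slots >= reqs[r].slots_needed) {
                devs[d].free_slots -= reqs[r].slots_needed;
                reqs[r].assigned_device = devs[d].device_id;
                satisfied++;
                break;
            }
        }
    }
    return satisfied;
}
```

With two devices offering 2 and 1 free slots, requests for 1, 2, and 1 slots end up with the first and third placed on device 0. The two-slot request is left unsatisfied because no single device has two slots remaining.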

Intel FPGA SDK for OpenCL

For FPGA developers who want to create custom accelerator functions to run on Intel FPGAs, the acceleration stack provides the Intel® FPGA SDK for Open Computing Language (OpenCL). OpenCL is an industry-standard, C-based programming language that lets users abstract away the traditional hardware FPGA development flow in favor of a faster, higher-level software development flow. With the Intel FPGA SDK for OpenCL, we develop FPGA designs in OpenCL C using that high-level software flow. We can emulate OpenCL C accelerator code on an x86-based host in seconds, get a detailed optimization report with specific algorithm pipeline dependency information, or prototype the accelerator kernel on a virtual FPGA fabric in minutes.
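OpenCL C kernels are written from the point of view of a single work-item, which asks for its index with `get_global_id(0)`. The plain C sketch below imitates that execution model on a CPU by looping over the index space. This is roughly the idea behind checking kernel logic on an x86 host before the long FPGA compile. The `ndrange_1d` helper and the explicit `gid` parameter are hypothetical stand-ins. In real OpenCL C the kernel would be marked `__kernel` with `__global` pointers and launched by the runtime, and it is the SDK’s offline compiler, not this loop, that turns it into an FPGA pipeline.

```c
#include <assert.h>
#include <stddef.h>

/* Arguments for a SAXPY-style kernel: out[i] = a * x[i] + y[i]. */
typedef struct {
    float a;
    const float *x;
    const float *y;
    float *out;
} saxpy_args;

/* One work-item. In OpenCL C the index would come from get_global_id(0);
 * here it is passed in explicitly. */
void saxpy_work_item(size_t gid, void *p) {
    saxpy_args *args = p;
    args->out[gid] = args->a * args->x[gid] + args->y[gid];
}

/* Hypothetical CPU emulation of a 1-D NDRange launch: run every work-item.
 * Work-items are independent of each other, which is what allows hardware
 * to execute them in parallel or stream them through a deep pipeline. */
void ndrange_1d(size_t global_size, void (*kernel)(size_t, void *), void *args) {
    assert(kernel != NULL);
    for (size_t gid = 0; gid < global_size; gid++)
        kernel(gid, args);
}
```

For example, with `a = 2`, `x = {1, 2, 3, 4}`, and `y = {10, 20, 30, 40}`, running `ndrange_1d(4, saxpy_work_item, &args)` fills `out` with `{12, 24, 36, 48}`.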

Summary

Intel is leading the FPGA world with highly programmable FPGA solutions. The time is right to examine the programming aspects of FPGAs. Moore’s Law has given FPGA designers a lot to work with, and the designers, in turn, have used those transistors to add features with software programmability in mind. OpenCL has helped connect FPGA developers with application needs. And now Intel has released a framework to help system owners, application developers, and FPGA programmers interact in a standard way. That is a real advantage when using Intel FPGAs: a common developer interface, tools, and IP make it easier to leverage FPGAs and reuse code.
